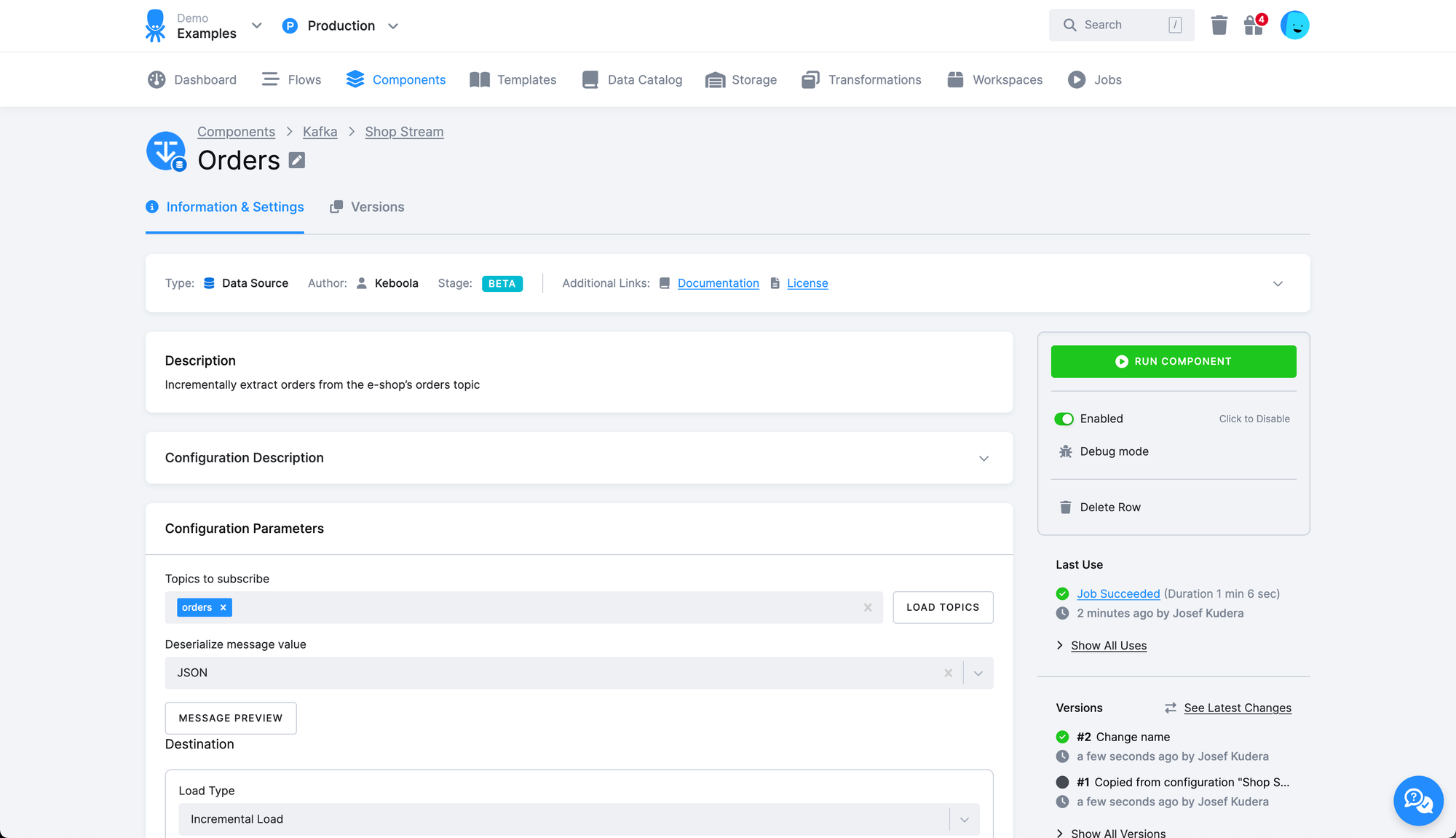

Components

Kafka Batch Extractor & Writer

We’re introducing a new, cost-efficient way to integrate with Kafka: scalable, reliable batch data exchange with less infrastructure overhead.

The new Kafka Extractor (keboola.ex-kafka) and Kafka Writer (keboola.wr-kafka), now in beta, allow batch integration of Keboola with Kafka. This approach can be a better fit for use cases like periodic BI data refreshes or scheduled analytics jobs, where continuous real-time streaming with Keboola Data Streams may not be necessary.

Kafka Batch Extractor (Consumer)

- Security protocols: Supports PLAINTEXT, SASL_PLAINTEXT, and SSL.

- Payload handling: Deserializes text, JSON, or Avro messages.

- Schema support: Avro schemas can be provided as a string or fetched from Confluent Schema Registry.

- State management: Maintains its own committed offsets, independent of Kafka’s consumer groups.

- Advanced options: Pass any additional parameters to the consumer, e.g., to manually override starting offsets or enable debug logging.
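To illustrate what "maintains its own committed offsets" means for a scheduled job, here is a minimal Python sketch of a batch run that resumes from offsets it stores itself rather than from a consumer group. It is not the extractor's implementation: the in-memory `topic` dict stands in for a real Kafka topic, and the `run_batch` helper and the shape of the `state` dict (partition mapped to the next offset to read) are hypothetical. Only JSON payloads are decoded here.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical stand-in for a Kafka topic: partition number -> raw message values.
# A message's index in its list plays the role of its Kafka offset.
Topic = Dict[int, List[bytes]]


def run_batch(topic: Topic, state: Dict[str, int]) -> Tuple[List[dict], Dict[str, int]]:
    """Read every message added since the previous run; return (rows, new_state).

    `state` maps a partition (as a string, since JSON object keys are strings)
    to the next offset to read. It is owned by the job, not by a consumer group.
    """
    rows: List[dict] = []
    new_state = dict(state)
    for partition, messages in sorted(topic.items()):
        start = state.get(str(partition), 0)  # unseen partitions start at offset 0
        for offset in range(start, len(messages)):
            payload = json.loads(messages[offset].decode("utf-8"))  # JSON payload
            rows.append({"partition": partition, "offset": offset, **payload})
        new_state[str(partition)] = len(messages)  # next offset to read
    return rows, new_state


# First run: no stored state, so everything is read.
topic: Topic = {0: [b'{"id": 1}', b'{"id": 2}'], 1: [b'{"id": 3}']}
rows, state = run_batch(topic, {})
print(len(rows), state)  # 3 {'0': 2, '1': 1}

# A new message arrives; the next run picks up only that one.
topic[0].append(b'{"id": 4}')
rows2, state2 = run_batch(topic, state)
print(rows2)  # [{'partition': 0, 'offset': 2, 'id': 4}]
```

In this sketch the starting position depends only on the stored state (or on an explicit override of it), so consumer-group settings on the Kafka side have no effect on where the next batch begins.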

Kafka Batch Writer (Producer)

- Security protocols: Supports PLAINTEXT, SASL_PLAINTEXT, and SSL.

- Serialization formats: Send messages as text, JSON, or Avro (schema from string or Schema Registry).

- Message customization:

  - Set the message key from a table column.

  - Include selected table columns in the message value.

- Schema flexibility: Supports both user-provided Avro schema strings and Schema Registry integration.

- Advanced options: Pass any parameters to the producer.
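The key and value options can be pictured as a simple row-to-message mapping. The Python sketch below assumes JSON output and uses a hypothetical `rows_to_messages` helper; it only builds the (key, value) byte pairs a producer would send and does not connect to Kafka. Values stay as strings because the rows are read from CSV.

```python
import csv
import io
import json
from typing import Dict, Iterable, List, Optional, Sequence, Tuple


def rows_to_messages(
    rows: Iterable[Dict[str, str]],
    key_column: Optional[str],
    value_columns: Sequence[str],
) -> List[Tuple[Optional[bytes], bytes]]:
    """Map table rows to (key, value) byte pairs that a Kafka producer could send."""
    messages: List[Tuple[Optional[bytes], bytes]] = []
    for row in rows:
        # Key comes from a single column; None means an unkeyed message.
        key = row[key_column].encode("utf-8") if key_column else None
        # Value is a JSON object built from the selected columns only.
        value = {column: row[column] for column in value_columns}
        messages.append((key, json.dumps(value).encode("utf-8")))
    return messages


table = io.StringIO("order_id,customer,amount\n1001,acme,25.50\n1002,globex,12.00\n")
messages = rows_to_messages(
    csv.DictReader(table), key_column="order_id", value_columns=["customer", "amount"]
)
print(messages[0])  # (b'1001', b'{"customer": "acme", "amount": "25.50"}')
```

Choosing a key column such as `order_id` matters because Kafka's default partitioning sends messages with the same key to the same partition (as long as the partition count does not change), which keeps per-key ordering intact.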

These components let you consume and produce Kafka data in scheduled batches, giving you the flexibility of Kafka without the cost of always-on streaming. They are ideal for BI and reporting pipelines, or for reverse ETL when writing data from Keboola back to a Kafka topic.