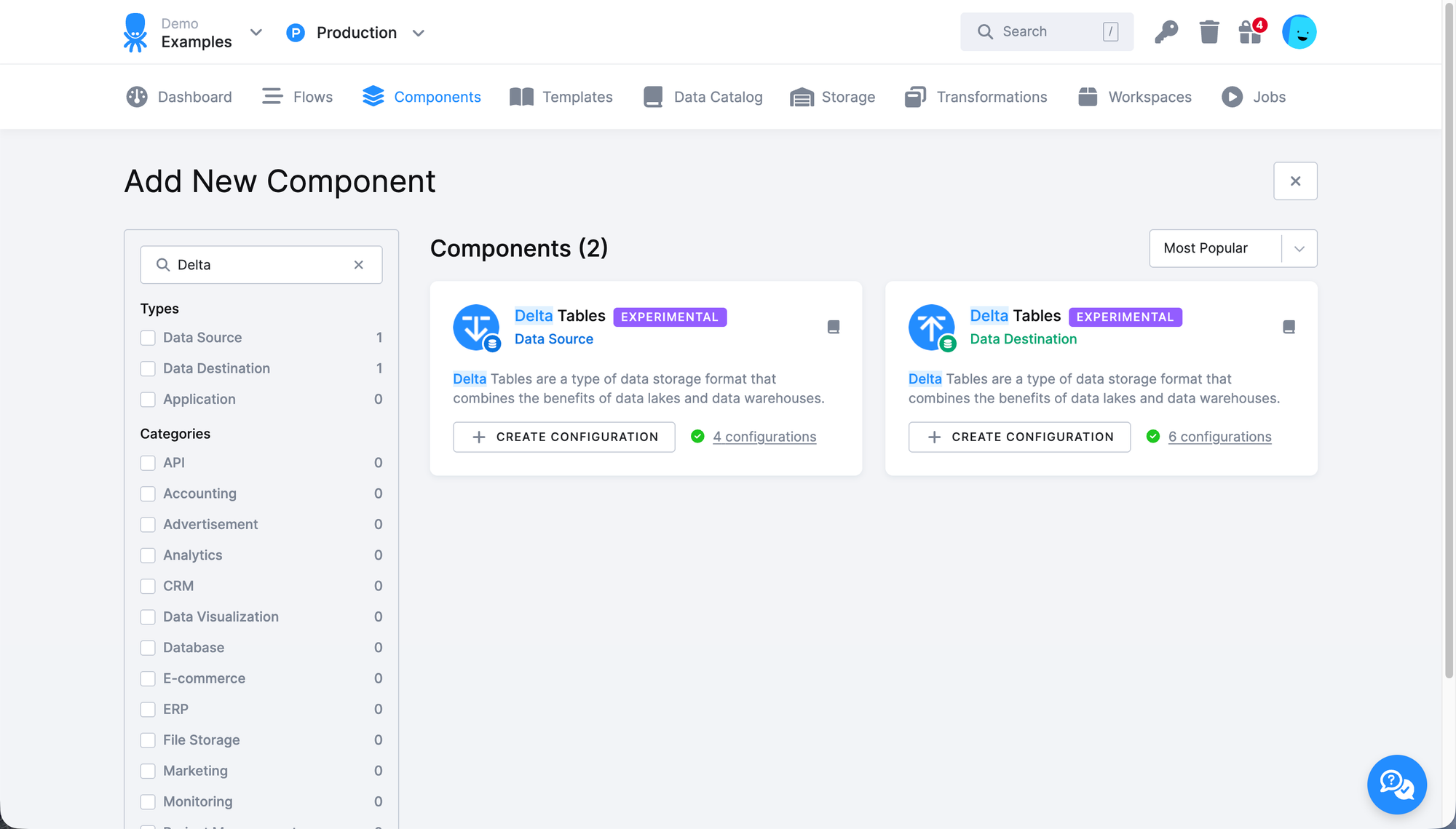

Delta Tables Support via New Extractor and Writer

We’ve released two new experimental components for reading from and writing to Delta Tables, with support for both blob storage and Unity Catalog access.

The Delta Tables Extractor and Delta Tables Writer are now available as experimental components. They enable efficient data integration with Delta Lake, whether you're working directly with object storage (S3, Azure Blob, GCS) or through Unity Catalog in Databricks.

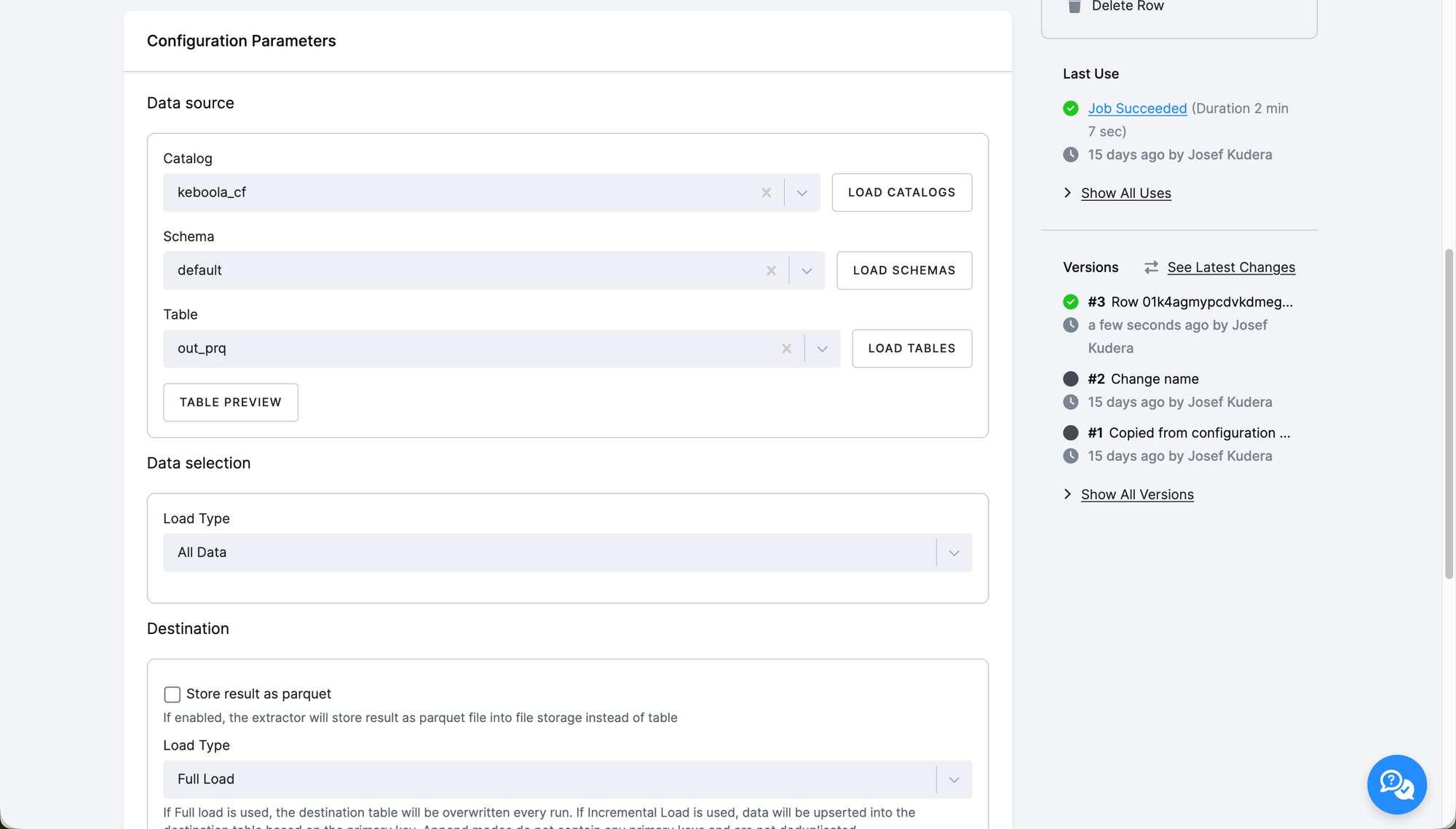

Delta Tables Extractor

The extractor gives you several ways to pull data from Delta Tables:

- Flexible data selection: Extract full tables, select specific columns, or use custom SQL queries.

- Multiple load types: Supports full loads, incremental loads, and append mode.

- Access modes: Works with both Unity Catalog and direct blob storage access.
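This announcement does not describe how the extractor decides which rows count as new in an incremental load. One common approach is to track a watermark on a monotonically increasing column and fetch only the rows past it on each run. The minimal Python sketch below shows that idea under stated assumptions: the `updated_at` column, the in-memory row list, and the `incremental_extract` function are hypothetical and do not represent the extractor's actual configuration or implementation.

```python
from datetime import datetime
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]


def incremental_extract(
    rows: List[Row],
    last_watermark: Optional[datetime],
    watermark_column: str = "updated_at",  # hypothetical column name
) -> Tuple[List[Row], Optional[datetime]]:
    """Return rows newer than `last_watermark` plus the watermark to store for the next run.

    Illustrative sketch only; not the Delta Tables Extractor's implementation.
    """
    if last_watermark is None:
        # No stored state yet: the first run behaves like a full load.
        new_rows = list(rows)
    else:
        # Later runs pick up only rows changed since the previous watermark.
        new_rows = [r for r in rows if r[watermark_column] > last_watermark]

    # Advance the watermark to the newest value seen; keep the old one if nothing is new.
    if new_rows:
        next_watermark = max(r[watermark_column] for r in new_rows)
    else:
        next_watermark = last_watermark
    return new_rows, next_watermark
```

On a first call with `last_watermark=None` every row is returned; passing the returned watermark into the next call yields only rows whose `updated_at` is strictly later.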

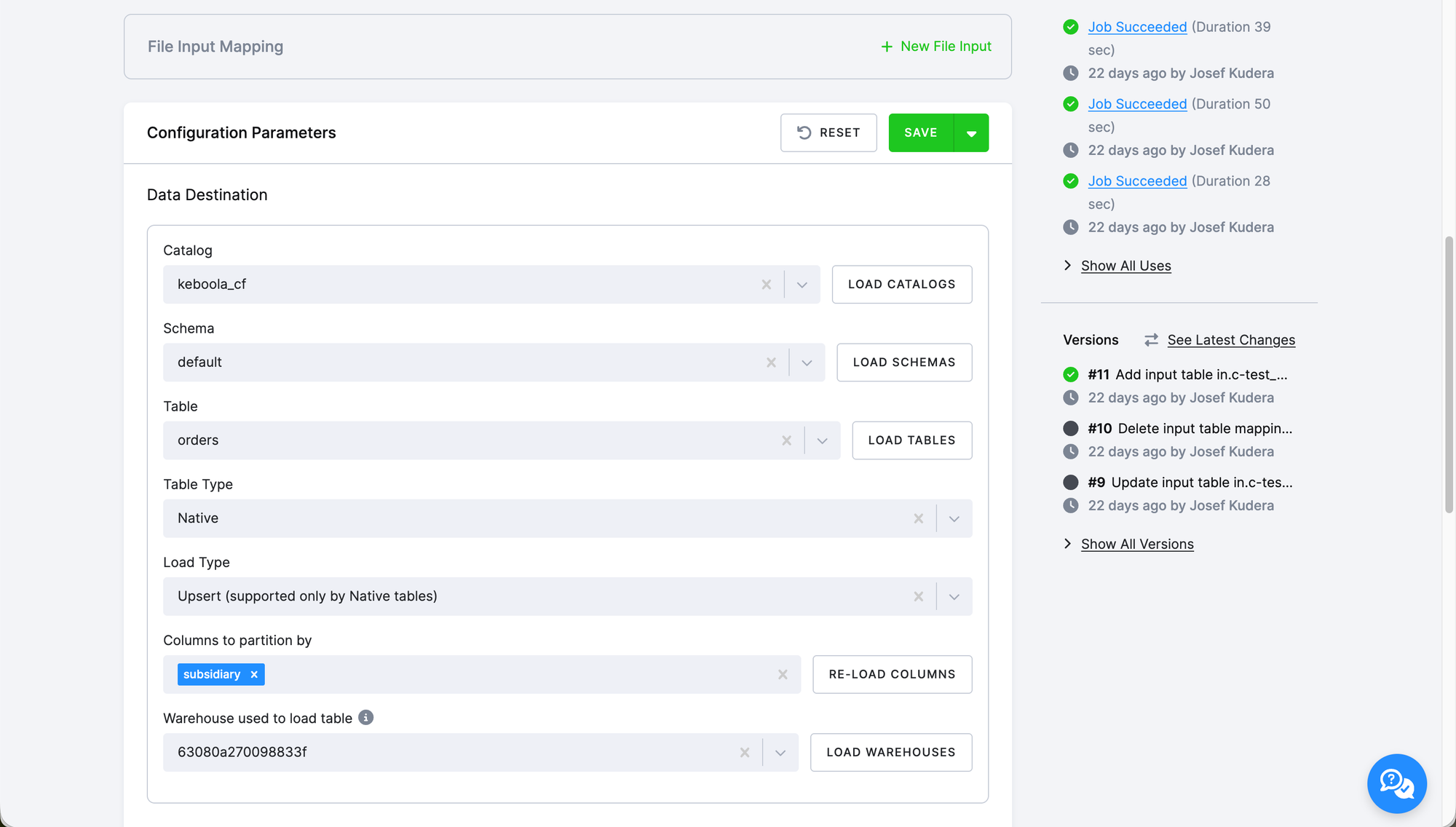

Delta Tables Writer

The writer simplifies writing data back into Delta Tables and offers the following configuration options:

- Input options: Write from storage tables or Parquet files.

- Multiple write modes: Choose from append, overwrite, upsert, or error on existing records.

- Partitioning: Supports partitioned tables for better read and write performance.

- Storage flexibility: Supports both external tables (via blob storage) and native Databricks tables (via DBX Warehouse).

- Performance tuning: Configurable batch size and insertion order for external tables.

- Optional Parquet output: Store results as Parquet files for efficient downstream use.
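To make the difference between the four write modes concrete, here is a minimal in-memory Python sketch. It assumes rows are identified by a hypothetical primary-key column `id` (used for upserts), and it assumes that "error" means the write is rejected when the target already contains records. The `write_rows` function is purely illustrative and is not the writer's implementation.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def write_rows(existing: List[Row], incoming: List[Row], mode: str, key: str = "id") -> List[Row]:
    """Return the target table's contents after writing `incoming` in the given mode.

    Hypothetical sketch: `key` is an assumed primary-key column used for upserts.
    """
    if mode == "append":
        # Keep everything already there and add the new rows at the end.
        return existing + incoming
    if mode == "overwrite":
        # Replace the table contents entirely.
        return list(incoming)
    if mode == "upsert":
        # Update rows whose key already exists; insert rows with new keys.
        merged = {row[key]: row for row in existing}
        for row in incoming:
            merged[row[key]] = row
        return list(merged.values())
    if mode == "error":
        # Assumed semantics: refuse to write if the target already has records.
        if existing:
            raise ValueError("target table already contains records")
        return list(incoming)
    raise ValueError(f"unknown write mode: {mode!r}")
```

With `existing = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}]` and `incoming = [{"id": 2, "v": "B"}, {"id": 3, "v": "c"}]`, append yields four rows, overwrite yields just the two incoming rows, and upsert yields ids 1, 2 and 3 with id 2 updated to `"B"`.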

These components are ideal for teams that use Delta Lake as a central part of their data architecture. Because they are still experimental, we welcome your feedback as we continue to evolve their capabilities.